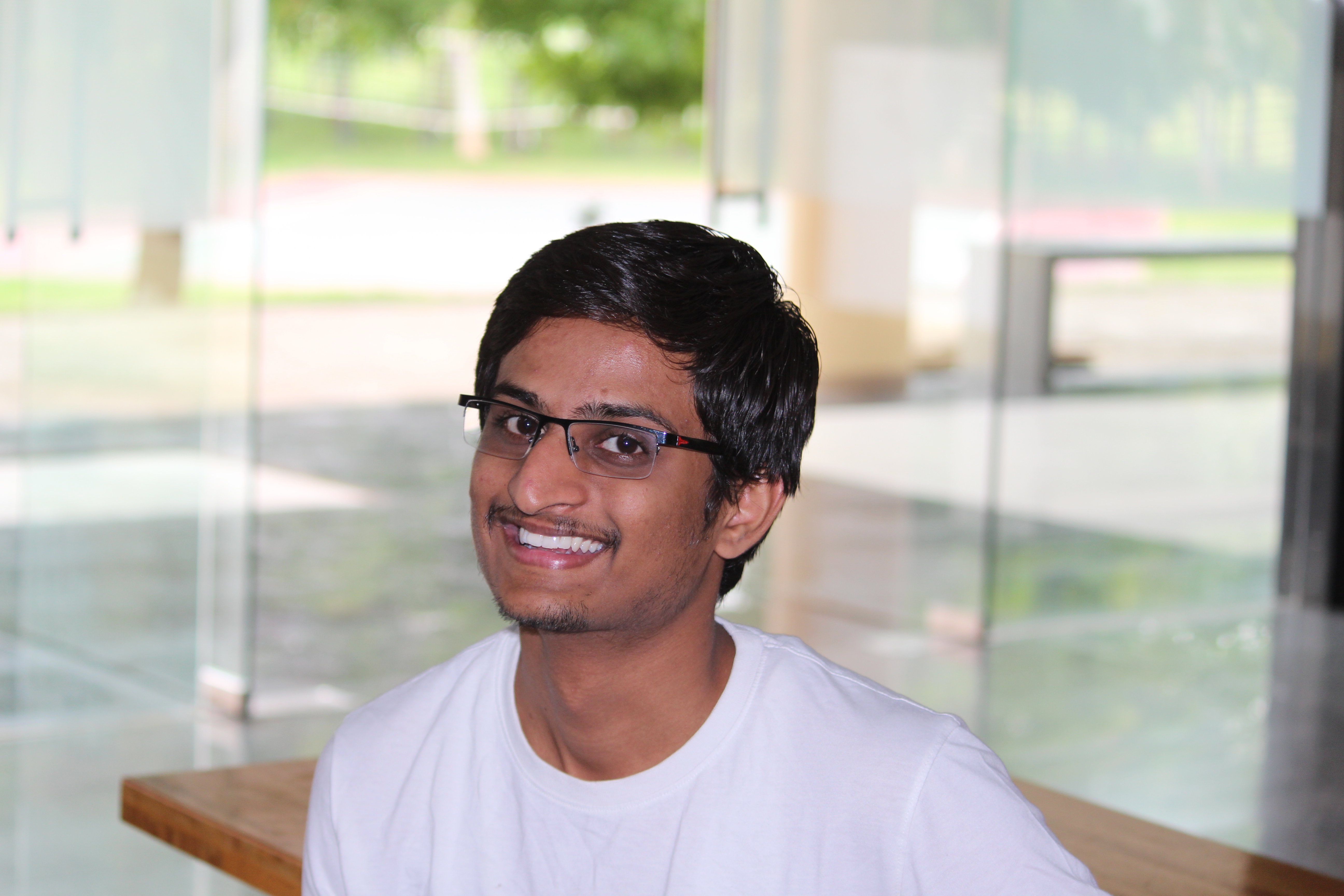

Lokesh Chandak

- Role

Software Engineer

- Years of Experience

5.2 years

Skillsets

- LLAMA

- Bootstrap

- Claude

- Copilot Studio

- Dataverse

- Docker

- Elasticsearch

- FastAPI

- FAISS

- Flask

- Git

- Glean

- Hugging Face

- Kubernetes

- Azure Blob

- MongoDB

- MS SQL

- MudBlazor

- Pinecone

- Postman

- Power BI

- Power Virtual Agents

- Python RPA

- Semantic Kernel

- Streamlit

- Visual Studio

- VS Code

- CI/CD

- Python

- SQL

- C#

- Blazor Server

- .NET Core - 4 Years

- LangChain

- Microsoft Azure

- Power Automate

- Salesforce

- Sprinklr

- AutoGPT

- Azure OpenAI

- JavaScript - 3 Years

- DevOps

- jQuery

- LangGraph

- LlamaIndex

- Microsoft Teams

- RAG

- semantic search

- Service Cloud

- Slack

- UiPath

- Anthropic

- Azure AI Search

Vetted For

- Roles & Skills

- Results

- Details

- AI Chatbot Developer (Remote), AI Screening

- 74%

- Skills assessed: CI/CD, AI chatbot, Natural Language Processing (NLP), AWS, Azure, Docker, Google Cloud Platform, Kubernetes, Machine Learning, TypeScript

- Score: 67/90

Professional Summary

- Aug, 2023 - Present (2 yr 2 months)

Software Engineer

Cohesity

- Dec, 2020 - Jul, 2023 (2 yr 7 months)

Software Engineer

Acuvate Software

Applications & Tools Known

Python

C#

UiPath

Azure DevOps Server

Azure

Microsoft Bot Framework

Microsoft SQL Server

Microsoft Power Platform

Bitbucket

Sourcetree

Elasticsearch

LangChain

.NET Core

MongoDB

Postman

Microsoft Azure

Grafana

Power BI

Python Automation

Power Automate

Azure Functions

Git

CI/CD Pipelines

Work History

Software Engineer

Cohesity

Software Engineer

Acuvate Software

Achievements

- Won 2nd Prize in Innovation Idea Competition presented on Brain-controlled Mobile Gantry Robot held on National Science Day 2020.

- Won 1st Prize in Machine Learning Internship for the project (Food Snap).

- Student Council Member of NMIMS University for the year 2017-2018.

Major Projects

MarketMindAI Agentic Chatbot for Market Insights and Predictions

Mobile Gantry Robot for Pick and Place Application

Smart Home Automation

Food Recognition System (Food Snap)

IoT-based LPG Cylinder Monitoring System

Education

MBA in AI & ML

D.Y. Patil (2025)

PG Diploma in Industrial Robotics

RTMNUs OCE (2020)

B.Tech Mechatronics

Mukesh Patel School of Technology and Management Studies (2019)

Certifications

Microsoft Certified: Designing and Implementing Microsoft DevOps Solutions (AZ-400), Feb 2023

Microsoft Certified: Power Platform Fundamentals (PL-900), September 2022

Microsoft Certified: Azure AI Fundamentals (AI-900), July 2022

Microsoft Certified: Azure Fundamentals (AZ-900), June 2022

Interests

AI-interview Questions & Answers

Sure. I completed my B.Tech in mechatronics engineering in 2019. After that, I completed a postgraduate diploma in industrial robotics, where we studied robotics. Later, I joined Acuvate as a chatbot developer, where we developed many chatbots. We rolled out 15+ chatbots on WhatsApp, plus some on Instagram [count unclear in the recording]. Then we developed chatbots for the Teams channel and integrated them. We used many tech stacks, such as Microsoft Power Virtual Agents, and after that the Microsoft Bot Framework SDK in .NET. Then we created a portal from scratch using Blazor so that we could design a flow. From that portal you can train your chatbot and analyze it later. Later I joined Veritas, where we also developed chatbots and delivered 5+ chatbots on the Teams channel. As for my background, I love to ride bicycles, and I always ride in the morning. I also go to the gym to stay fit. That's it. Thank you.

What techniques have you used to troubleshoot a chatbot that is not accurately recognizing user intents?

Okay. First of all, wherever I use NLP, we train intents and entities. In my current project, we train on the language service from Azure. Earlier, we trained on LUIS. There, we train some user utterances, and once the utterances are trained with particular responses, we test in the same portal on the Azure platform to check whether we are getting accurate results. If we are not, we retrain. We try to train as few utterances as possible, with the proper keywords, so that the model recognizes what we want. Beyond that, if an utterance is still not recognized, we have developed a feedback mechanism. Once the conversation is done, we ask the user whether the bot resolved the query or not. If the user says it was not resolved and they were looking for something else, it works like an auto-train mechanism: the utterance is stored and then trained into the intent the user actually wanted. The next time a user comes with the same utterance, the bot can provide the accurate result using that intent. That is what we did with the Microsoft services. We have also developed our own NLP. There, we work with stop words so that the scores are used accurately. If an intent is not recognized, we capture the utterance through the feedback, adjust the stop words, and analyze it. With proper fine-tuning, that utterance then gets recognized for that particular intent.
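The feedback loop described in this answer can be sketched roughly as follows. This is a minimal illustration in plain Python, not the candidate's actual implementation: the `FeedbackStore` class, its method names, and the `ResetPassword` intent are hypothetical, and a real system would push the collected batch to whichever NLP service is being retrained.

```python
from collections import defaultdict


class FeedbackStore:
    """Collects utterances the bot failed to resolve, grouped by the
    intent the user actually wanted (hypothetical helper)."""

    def __init__(self):
        self._pending = defaultdict(list)

    def record(self, utterance, predicted_intent, resolved, correct_intent=None):
        # Only unresolved conversations with a known correct intent
        # become candidates for retraining.
        if resolved or correct_intent is None:
            return False
        # Normalise case and whitespace so duplicates are stored once.
        normalized = " ".join(utterance.lower().split())
        if normalized not in self._pending[correct_intent]:
            self._pending[correct_intent].append(normalized)
        return True

    def training_batch(self):
        # Snapshot of utterances to add to each intent on the next retraining run.
        return {intent: list(utts) for intent, utts in self._pending.items()}
```

A retraining job would periodically read `training_batch()` and add those utterances to the corresponding intents before retraining the model.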

In terms of NLP, how would you handle the challenge of homonyms in user input while developing a chatbot?

Yeah. This is one of the main challenges in NLP. Sometimes the wrong homonym gets picked up. So we train the utterance multiple times with the intended word so that its probability increases. For the other word, we either do not train it or train it under the None intent, so that if that homonym comes again, it goes to the None bucket and the intent we do not want is not picked up. If we do want the homonyms to be picked up for particular utterances, we train with all the homonyms, or we provide them as entities within the intent so that they are also recognized. We can create this as a list. Basically, we create multiple utterances, and for the entities within an intent, we provide a list that includes the different homonyms. Then, if someone provides a homonym or makes a spelling mistake, the NLP model recognizes it and fills that entity for the user, so the bot does not ask the user for it again in the next utterance.
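The list-entity idea mentioned above can be illustrated with a small sketch. It assumes a hand-written mapping from a canonical entity value to its accepted surface forms. The `LIST_ENTITY` data and the `extract_list_entities` function are made up for illustration and do not reflect any specific Azure language service API.

```python
import re

# Hypothetical list entity: canonical value -> accepted surface forms,
# including alternative wordings and a common misspelling.
LIST_ENTITY = {
    "leave": ["leave", "leaves", "time off", "vacation", "leav"],
    "payslip": ["payslip", "pay slip", "salary slip"],
}


def extract_list_entities(utterance, entity_list=LIST_ENTITY):
    """Return the canonical entity values whose surface forms occur in the utterance."""
    text = utterance.lower()
    found = []
    for canonical, forms in entity_list.items():
        for form in forms:
            # Whole-word match so that "leav" does not fire inside another word.
            if re.search(r"\b" + re.escape(form) + r"\b", text):
                found.append(canonical)
                break
    return found
```

Because every surface form resolves to one canonical value, the bot can fill the entity slot directly instead of asking the user again.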

Okay. In the chatbot back-end system, we develop it in such a way that it can handle a very large volume of user traffic. We deploy it to Azure App Service, which handles the load properly. Then there is the activity handler, which we use in the bot SDK. Through the activity handler we get the conversation ID and the user ID. With the conversation ID we store the particular user's cache, making sure that PII data is not stored. With the help of the conversation ID, we know the user's responses, which flow each user is in, and what their last utterance was, so the bot knows what response to provide next. So it does not matter if there is very heavy traffic. We host it in App Service and integrate it with Azure Bot Service, so that every conversation ID gets the appropriate responses. Even if hundreds or thousands of users are chatting at the same time, it will not lag and it will provide accurate results. It also will not mix up responses between users. We use synchronous methods inside the flow so that it gives proper and appropriate responses without jumbling them.
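Keeping per-conversation state keyed by conversation ID, as described above, might look like the following sketch. It uses an in-memory dictionary guarded by a lock purely for illustration. The `ConversationStateStore` class is hypothetical, and a production bot would more likely rely on the storage layer provided by its bot SDK.

```python
import threading


class ConversationStateStore:
    """In-memory state keyed by conversation ID (illustrative only)."""

    def __init__(self):
        self._lock = threading.Lock()
        self._state = {}

    def update(self, conversation_id, flow, last_utterance):
        # Each conversation writes only to its own key, so concurrent
        # users cannot overwrite each other's position in a flow.
        with self._lock:
            self._state[conversation_id] = {
                "flow": flow,
                "last_utterance": last_utterance,
            }

    def get(self, conversation_id):
        with self._lock:
            # Return a copy so callers cannot mutate the shared state.
            return dict(self._state.get(conversation_id, {}))
```

Since every lookup goes through the conversation ID, responses for one user are never built from another user's flow, no matter how many conversations are active.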

[...] in understanding various dialects and accents.

Okay. When we test the effectiveness of the chatbot, there is something called language understanding, with which we can identify which language or accent the user is using. The input, whether it is in a Spanish or German accent, is converted to English and then provided to the chatbot, so that the back end works with only one language. Whether you speak Hindi, Marathi, English, Spanish, Japanese, or anything else, all the processing behind the scenes happens in English. Once that processing is done, we convert the response back into the user's language. If the input is in English but the accents are different, we can train the intents and entities accordingly, so that if the bot fails once, the issue does not come up again. The next time the user comes, the bot provides accurate results.

Which design pattern?

Basically, we use Microsoft dialog flows, which we develop in Composer, so that whatever complex designs come up, we design the flow inside Composer. It is then integrated with the Microsoft Bot Framework SDK. So complex flows are handled with the Bot Framework SDK as well as Composer. It depends. Channel-specific behavior, different types of utterances, accents, all of those get handled in the Composer flow. There are different types of dialogs, if/else conditions, and multiple switch cases. Then, with the help of intent and entity recognition, we route to the flows. So it will get clearly

After reviewing this JavaScript function integrating chatbot responses within an existing system, identify potential issues in the function.

"Welcome to our service." Okay. We are getting it with getElementById. Okay. So here, in our HTML, we are providing the response data, but we can have some welcome messages, shown when the chatbot icon is opened, like "Hi, how can I help you?" Once you click on it, the welcome message is shown along with a few cards, so that users know in which areas the chatbot can help. When appending the chatbot responses, rather than hardcoding them, we can fetch them from a database or similar and pass them to the response message. Here it is a static message, "Welcome to our service", but we can also have generated responses by integrating Azure OpenAI services, which we did successfully in our own chatbot. Yeah. And, yep, I think that's it.

Examine the Python snippet used in a chatbot response system. Can you identify and explain an issue regarding the natural language processing logic implementation here?

From textblob... define check_sentiment... TextBlob... Okay. We are using TextBlob, if sentiment... Okay. So there are different NLP libraries we can use. Here we are using TextBlob, but I feel TextBlob is not very good. We have used NLTK, and it gives us very good results. Here, we cannot provide stop words, and we cannot adjust the values of the words ourselves, meaning how much weightage each word has, so that even a sarcastic word gets analyzed correctly. If you can provide stop words with proper weightage, then it can analyze sarcastic comments too. Here, I see that the TextBlob analysis is used directly, but I am not sure whether the analysis contains the polarity of the sentiment. It just returns whether the response is positive or negative. Take "Your service is not that bad." Here both "not" and "bad" appear. So it may come out positive, since it is considering two negative things, or the sentiment may print the polarity as neutral or negative. But if you use NLTK, when "not" comes, we can remove the stop word and treat it as negative, and add a weightage for how negative it is. If "bad" comes, then it is that much negative, and we can do the analysis that way. Yeah.
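The point about word weightage and negation can be made concrete with a tiny lexicon-based scorer. This sketch uses neither TextBlob nor NLTK. The `WEIGHTS` and `NEGATIONS` tables and the `polarity` function are invented for illustration, and the negation rule (flip the sign of the next weighted word) is a deliberate simplification.

```python
# Hypothetical per-word weights; positive values mean positive sentiment.
WEIGHTS = {"good": 1.0, "great": 1.5, "bad": -1.0, "terrible": -1.5}
NEGATIONS = {"not", "no", "never"}


def polarity(text):
    """Sum word weights, flipping the sign of the first weighted word after a negation."""
    score = 0.0
    negate = False
    for token in text.lower().replace(".", " ").replace(",", " ").split():
        if token in NEGATIONS:
            negate = True
            continue
        if token in WEIGHTS:
            weight = WEIGHTS[token]
            score += -weight if negate else weight
            negate = False  # a negation applies to one weighted word only
    return score
```

With these weights, "Your service is not that bad." scores as mildly positive because "not" flips the negative weight of "bad", while "The service is bad" stays negative.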

Discuss a method that allows a chatbot to retain context across multiple sessions while respecting user privacy.

Yeah. Basically, this is the same approach we are using. There are multiple sessions, right? The user comes and chats. There are things like the conversation ID and the user ID. If a user comes repeatedly on the Teams channel or some other platform, there is the user ID. We can store that user ID in some cache storage, along with things like which flow the user was in. Some data we can store as cache and use later. But considering privacy policies, we always mask all the PII data of the users, so it never gets stored in the cache storage. If the user wants to clear the cache, we have functionality that clears it all, so that the user's past conversations are no longer held by the chatbot. I am not saying we store all the conversation data in the cache storage. We keep some conversation logs, some None-intent logs, and some other logs such as the feedback the users have provided, stored with the user ID and conversation ID so that we know what happened. So even with multiple sessions, when users want to continue their previous session or chat with their previous context, we can provide it in detail.
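Masking PII before anything reaches the cache, and letting the user clear what is stored, could be sketched as below. The regular expressions cover only simple email and phone formats and are assumptions made for this example. The `SessionCache` class is hypothetical, and real PII detection would normally use a dedicated service.

```python
import re

# Simplified patterns for illustration; they will not catch every format.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s-]{7,}\d")


def mask_pii(text):
    """Replace email addresses and phone-like digit runs with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


class SessionCache:
    """Per-user context that survives across sessions (in-memory sketch)."""

    def __init__(self):
        self._data = {}

    def remember(self, user_id, flow, utterance):
        # Mask before storing so raw PII never lands in the cache.
        self._data[user_id] = {"flow": flow, "last_utterance": mask_pii(utterance)}

    def recall(self, user_id):
        return self._data.get(user_id)

    def clear(self, user_id):
        # User-requested deletion of everything cached for them.
        self._data.pop(user_id, None)
```

On a returning session the bot can call `recall` to resume the previous flow, and `clear` removes the stored context on request.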

Okay. Basically, I am not well versed with Java for chatbots. I know Java and its functionality, and I have developed a few applications in Java, but I am not familiar with Java as used for chatbots because, as you know, Microsoft is also going to end long-term support for Java and Python and fully support .NET and JavaScript (Node.js). So when we developed the chatbots, we chose C# as the technology, using the Bot Framework SDK. So we mainly work with C# in the SDK. To interact with multiple database systems concurrently, there are a few approaches we use, like Dapper. I think Java will also have something similar to Dapper with which we can interact with database systems. There is another one as well, which is .NET-based only. I am not recollecting the name, and I will let you know. With it, we can integrate the database directly through a class that we call with the connection strings. It is like a call through which we can fetch all those details from the database. I do not remember exactly which method we used to call, but I know all the steps.

Yeah. So for multilingual input, there are many kinds of multilingual inputs we can have. We developed a multilingual chatbot at my last company, Acuvate Software, for which we used one integration for multiple languages, provided by the Azure language service, the language translator. What we do is first detect the user's language. Once we understand which language it is, we convert the input to English. Then we process the English text within our chatbot code. Once all the processing is done, before sending the response, we convert it back into the language the user wrote in and provide it to the user. This way, we did not change our code or anything. We just added a middleware before the call that detects the user's language and translates it to English. So this is one approach to multilingual support.

Then there is a second option, where the Azure language service for conversational language understanding, which is like LUIS and lets us understand intents and entities, also provides multilingual support. There is also the custom question answering service, like a knowledge base (KB), where we can store all our question-and-answer pairs, and it provides multilingual support too. So if you ask something in another language, it will still give you a proper response. This is the second approach, but I prefer the first approach, so that the user also gets the output in their own language. Otherwise, in the second approach, you get the answer as you stored it. Suppose you trained it in English, then it will respond to you in English only. Thank you.
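The first approach (translate in, process in English, translate back) can be sketched as a small wrapper around the bot logic. The three callables `detect_language`, `translate`, and `process_in_english` are placeholders injected by the caller. They stand in for whatever translation service and bot code are actually used and are not real Azure SDK functions.

```python
def handle_multilingual(text, detect_language, translate, process_in_english):
    """Translate-in, process, translate-out.

    All three callables are injected placeholders:
      detect_language(text) -> language code such as "es"
      translate(text, source_lang, target_lang) -> translated text
      process_in_english(text) -> the bot's English reply
    """
    source_lang = detect_language(text)
    # Step 1: normalise the input to English unless it already is English.
    english_text = text if source_lang == "en" else translate(text, source_lang, "en")
    # Step 2: the bot logic only ever sees English.
    english_reply = process_in_english(english_text)
    # Step 3: answer in the language the user wrote in.
    if source_lang == "en":
        return english_reply
    return translate(english_reply, "en", source_lang)
```

Because translation sits entirely outside `process_in_english`, the existing English-only bot code stays unchanged, which matches the middleware idea described in the answer.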