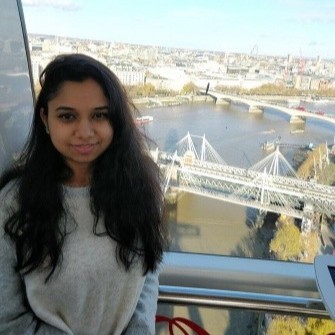

Ponithapunitha girish

- Role: Senior Data Engineer

- Years of Experience: 14 years

Skillsets

- MySQL

- AWS

- Hadoop

- Kubernetes

- PySpark

- Core Java

- Big Data

- BI tools

- Control-M scheduling

- Docker

- HDFS

- Hive

- Hue

- Jenkins

- YARN

Vetted For

- Big Data Engineer with Streaming Experience (Remote), AI Screening

- Result: 50%

- Skills assessed: Spark, CI/CD, Data Architect, Data Visualization, EAI, ETL, Hive, Power BI, PySpark, Talend, AWS, Hadoop, JavaScript, Embedded Linux, PHP, Problem Solving Attitude, Shell Scripting, SQL, Tableau

- Score: 45/90

Professional Summary

- Jun 2022 - Present (3 yr 4 months): Senior Data Engineer at Oracle Cerner

- Apr 2016 - Jun 2022 (6 yr 2 months): Data Analyst / Data Engineer at TCS, Deutsche Bank

- Jan 2015 - Mar 2016 (1 yr 2 months): Software Engineer at TCS, Visa Europe

- Dec 2009 - Mar 2012 (2 yr 3 months): Software Engineer Trainee at TCS, Deutsche Bank

- Apr 2012 - Dec 2014 (2 yr 8 months): Junior Software Engineer at TCS, Credit Suisse

Applications & Tools Known

Hadoop

HDFS

HIVE

MySQL

Docker

Jenkins

AWS

Hue

Kubernetes

Work History

- Senior Data Engineer at Oracle Cerner

- Data Analyst / Data Engineer at TCS, Deutsche Bank

- Software Engineer at TCS, Visa Europe

- Junior Software Engineer at TCS, Credit Suisse

- Software Engineer Trainee at TCS, Deutsche Bank

Achievements

- Experienced Senior Data Engineer with a strong understanding of PySpark, Hadoop, Core Java, and Big Data

- Led a developer team that achieved a 30% increase in project delivery efficiency

- Received On-Spot Awards for improving data accuracy and efficiency

- Received client appreciation from the Chief Country Officer in Ireland (Dublin)

Major Projects

Real-time Data Processing Pipeline

Education

Bachelor of Engineering in Telecommunication

BMS Institute of Technology (2009)

Certifications

Oracle Certified Java Programmer (April 2013)

AI Interview Questions & Answers

Hi, I'm Punita. I'm a data engineer with 14 years of overall experience, including around 6 to 7 years of data engineering experience. In my past projects I have worked on various data pipelines, from onboarding the data, through data massaging, to uploading the transformed data to S3 buckets or to the designated location in the Cloudera cloud system. I'm a multitasker and a quick learner. That's pretty much about myself.

In a Python-based ETL (extract, transform, load) process, the incremental load depends on the data we receive. If it is batch processing, we would do it with Hadoop MapReduce. But since it is Python, it is good to build on the Spark tech stack and use it along with Python. So we would use Spark for our incremental data loads.
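
The incremental-load idea can be sketched with a high-water mark: remember the latest change timestamp already loaded and pick up only newer records on each run. This is a minimal plain-Python sketch under stated assumptions, not the candidate's pipeline: the `updated_at` column and the `incremental_batch` helper are hypothetical. In PySpark the same filter would be written as `df.filter(col("updated_at") > last_watermark)`.

```python
from datetime import datetime
from typing import Iterable, List, Tuple


def incremental_batch(
    rows: Iterable[dict], last_watermark: datetime
) -> Tuple[List[dict], datetime]:
    """Return the rows changed after last_watermark, plus the new watermark.

    Assumes (hypothetically) that every row carries an 'updated_at' datetime.
    """
    # Keep only records modified since the previous successful load.
    new_rows = [r for r in rows if r["updated_at"] > last_watermark]
    # Advance the watermark to the newest change seen; if nothing arrived, keep the old one.
    new_watermark = max((r["updated_at"] for r in new_rows), default=last_watermark)
    return new_rows, new_watermark


# Example run against a hypothetical source table.
source = [
    {"id": 1, "updated_at": datetime(2024, 1, 1)},
    {"id": 2, "updated_at": datetime(2024, 1, 5)},
    {"id": 3, "updated_at": datetime(2024, 1, 9)},
]
batch, watermark = incremental_batch(source, datetime(2024, 1, 3))
print([r["id"] for r in batch], watermark)  # [2, 3] 2024-01-09 00:00:00
```

Persisting the returned watermark between runs (for example in a control table) is what makes the next run incremental; that storage step is left out of the sketch.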

To ensure zero downtime, we would use Spark. Since Spark has in-memory computation, we would expect zero downtime during ETL pipeline deployments. We would also ensure that the code is deployed to multiple regions, with a backup at a replication factor of 3, so that downtime is reduced during future pipeline deployments.

To validate the correctness of any ETL process in any of the BI tools, we would first check whether the input data we are getting is structured enough. If it is not, we would apply a data cleansing process, which includes removing or adding delimiters, removing extra spaces, and updating columns that are missing. That is one of the correctness approaches we would follow.
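
The cleansing steps described in this answer (trimming extra spaces, dropping stray delimiters, and filling in missing columns) can be sketched for a pipe-delimited feed. This is an illustrative plain-Python sketch; the `|` delimiter, the three-column `EXPECTED_COLUMNS` schema, and `cleanse_line` are hypothetical.

```python
from typing import Dict, List, Optional

# Hypothetical target schema for a pipe-delimited feed.
EXPECTED_COLUMNS = ["id", "name", "city"]


def cleanse_line(line: str, delimiter: str = "|") -> Dict[str, Optional[str]]:
    """Clean one delimited record so it matches EXPECTED_COLUMNS."""
    # Remove surrounding whitespace/newline, split, and trim spaces inside each field.
    fields: List[Optional[str]] = [f.strip() for f in line.strip().split(delimiter)]
    # Drop empty trailing fields produced by stray extra delimiters.
    while len(fields) > len(EXPECTED_COLUMNS) and fields[-1] == "":
        fields.pop()
    if len(fields) > len(EXPECTED_COLUMNS):
        raise ValueError(f"unexpected extra fields in {line!r}")
    # Pad short records so every expected column exists (missing -> None).
    fields += [None] * (len(EXPECTED_COLUMNS) - len(fields))
    # Treat empty strings as missing values as well.
    return {col: (val or None) for col, val in zip(EXPECTED_COLUMNS, fields)}


print(cleanse_line("  1 | Alice |Dublin | |\n"))  # {'id': '1', 'name': 'Alice', 'city': 'Dublin'}
print(cleanse_line("2|Bob"))                      # {'id': '2', 'name': 'Bob', 'city': None}
```

Rejecting records with too many fields, rather than silently truncating them, keeps genuinely malformed input visible for review.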

Data integrity would be maintained from the transactional SQL database to S3 by ensuring that all the data has been uploaded properly, that it is well partitioned using partitioning techniques, and that the data is sufficiently optimized. This is one data integrity approach, and we would make sure that the volume and veracity of the data have been considered. For security, we would use something like IAM principals and ACLs (access control lists) so that data integrity, privacy, and security are also maintained.
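
One common way to confirm that a load from a transactional database to S3 is complete is to compare a row count and an order-independent checksum computed on both sides. The sketch below is plain Python and assumes both sides can be read back as rows with identical value types. `table_fingerprint` and `verify_load` are hypothetical names, and this is one technique among several rather than the approach described in the answer.

```python
import hashlib
from typing import Iterable, Sequence, Tuple


def table_fingerprint(rows: Iterable[Sequence]) -> Tuple[int, str]:
    """Return (row_count, order-independent checksum) for a set of rows."""
    count = 0
    total = 0
    for row in rows:
        # Hash a canonical text form of the row; repr keeps types distinct (1 vs '1').
        digest = hashlib.sha256(repr(tuple(row)).encode("utf-8")).digest()
        # Summing (mod 2**64) is order-independent and still counts duplicate rows.
        total = (total + int.from_bytes(digest[:8], "big")) % (1 << 64)
        count += 1
    return count, format(total, "016x")


def verify_load(source_rows: Iterable[Sequence], target_rows: Iterable[Sequence]) -> bool:
    """True when both sides hold the same rows in any order (up to hash collisions)."""
    return table_fingerprint(source_rows) == table_fingerprint(target_rows)


source = [(1, "alice"), (2, "bob"), (2, "bob")]
target = [(2, "bob"), (1, "alice"), (2, "bob")]  # same rows, different order
print(verify_load(source, target))                       # True
print(verify_load(source, [(1, "alice"), (2, "bob")]))   # False: a row is missing
```

Because the checksum is a sum rather than an XOR, duplicated rows still change the result, which matters when a retry uploads the same batch twice.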

Spark's partitioning and caching are used so that jobs perform much better. For partitioning, we would consider coalesce the better option because it involves less shuffling. For caching, we would consider persist, where we can define the storage (memory) levels. This is how we can make the job perform better.
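
In PySpark the calls mentioned here are `DataFrame.coalesce(n)`, `DataFrame.repartition(n)`, and `DataFrame.persist(storageLevel)` (for example `StorageLevel.MEMORY_AND_DISK`). The toy model below, written in plain Python rather than Spark, only illustrates why coalesce shuffles less: it moves whole input partitions into fewer outputs, while repartition redistributes every record. It simplifies Spark's behaviour and is not its implementation.

```python
from typing import List

Partitions = List[List[int]]


def coalesce(parts: Partitions, n: int) -> Partitions:
    """Toy coalesce: merge neighbouring input partitions into n outputs.

    Each input partition is appended whole to one output, so no record is
    redistributed individually (the narrow, no-shuffle case).
    """
    if n < 1:
        raise ValueError("n must be at least 1")
    if n >= len(parts):
        # Without a shuffle, coalesce cannot increase the partition count.
        return [list(p) for p in parts]
    out: Partitions = [[] for _ in range(n)]
    for i, part in enumerate(parts):
        out[i * n // len(parts)].extend(part)
    return out


def repartition(parts: Partitions, n: int) -> Partitions:
    """Toy repartition: deal every record round-robin into n outputs (a full shuffle)."""
    if n < 1:
        raise ValueError("n must be at least 1")
    out: Partitions = [[] for _ in range(n)]
    k = 0
    for part in parts:
        for rec in part:
            out[k % n].append(rec)
            k += 1
    return out


parts = [[1, 2], [3, 4], [5, 6], [7, 8]]
print(coalesce(parts, 2))     # [[1, 2, 3, 4], [5, 6, 7, 8]]
print(repartition(parts, 2))  # [[1, 3, 5, 7], [2, 4, 6, 8]]
```

The trade-off shows up in the outputs: coalesce can leave partitions uneven (three single-record inputs coalesced to two give sizes 2 and 1), while round-robin repartition balances them at the cost of moving every record.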

In this function, I notice that it doesn't have any static kind of method, and it doesn't return anything. It is just a void method that says: if the report type is HTML, do this; else, if it is PDF, generate the PDF report. I also see that it is enclosed with a backslash, which is actually not needed. That is what I noticed at first glance.

In terms of batch data processing, if both of them are executed: tMap_1 has row1 and updates tFileOutputDelimited. But if tMap_1 is executed again, it will be overridden with row2 and tFileOutputDelimited_2.